Research

https://bib.dbvis.de/uploadedFiles/EuroVis_22__Embedding_Scoring.pdf

Contextualization of Language Models

Despite the success of contextualized language models on various NLP tasks, it is still unclear what these models really learn. This is especially true for function words, which are known to behave differently than content words. In preliminary work, we explored the continuum between function and content words using Visual Analytics and developed LMFingerprints, a novel scoring-based technique for explaining contextualized word embeddings. In more recent work, we have focused on the contextualization behavior of negation, coordination, and quantifiers. Check out the relevant publications for more details.

Natural Language Inference

My main research area is Natural Language Inference (NLI). In particular, I am interested in exploring how current advances in deep learning can be combined with more traditional approaches. To this end, I work on Hy-NLI, a hybrid NLI system that combines the precision of traditional symbolic approaches with the power and robustness of state-of-the-art deep models such as BERT and XLNet. To provide the symbolic precision, I build GKR4NLI, a symbolic inference engine that computes inference based on Natural Logic and GKR. Within the field of NLI, I also focus on the theoretical notion of NLI and on how the task can be refined to better represent the different nuances of human reasoning. To this end, I closely investigate the SICK corpus, correct portions of it, and conduct suitable annotation experiments. These computational and theoretical aspects are the main focus of my dissertation. Check out the relevant publications for more details.

mezzacotta.net

https://www.tickingmind.com.au/using-comic-strips-teach-inference/

http://naviglinlp.blogspot.com/2019/05/lecture-23-semantic-role-labeling.html

Semantic Parsing

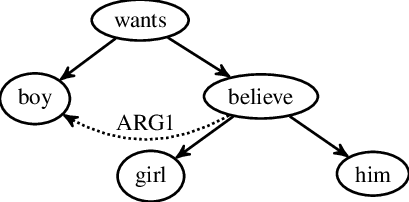

When semantic tasks such as NLI are pursued in a hybrid direction, the symbolic components they rely on must be efficient and high-performing. When these components rely on semantic parsing, it also becomes essential to have an expressive semantic representation on which inference can be computed. To this end, I work on a novel semantic representation, GKR, which represents a sentence as a layered graph, with different kinds of information included in different layers (subgraphs) for increased expressivity and modularity. Such a representation is suitable not only for symbolic approaches to semantic tasks such as NLI (see GKR4NLI), but also for hybrid approaches to semantic tasks (see Kalouli et al., 2018 and Hy-NLI). Check out the relevant publications for more details.

Question Classification

In Konstanz, I was a member of the Research Unit 2111 Questions at the Interfaces. The Research Unit is dedicated to investigating question formation, with a particular emphasis on non-canonical questions (e.g., rhetorical, echo, self-addressed, suggestive). The Unit combines expertise from theoretical, computational, and experimental linguistics as well as visual analytics. More precisely, I was a member of the P8 subproject, which focuses on the automatic classification of questions into their types, i.e., non-canonical (non-information-seeking) questions vs. canonical (information-seeking) questions. To this end, we investigated different learning scenarios and developed an active learning system that makes the annotation of the large amounts of data needed for further training faster and more reliable. Our research has also investigated question classification in multilingual settings. Check out the relevant publications for more details.